Deploying QuasarDB in cloud-native environments has been possible for many years using the official Docker images we provide. With the recent rise of container orchestration platforms such as Kubernetes, we can go further and use a single interface to provision, monitor and operate a QuasarDB cluster.

In this post, we will demonstrate an approach to deploy QuasarDB in a Kubernetes cluster, and discuss the various tradeoffs.

Choosing a Controller

Kubernetes provides a wide variety of workload controllers we can use. Before we can get started with writing the template, we need to decide how we are going to run QuasarDB, as this will determine what our templates will look like.

ReplicaSet

A ReplicaSet is a useful pattern when you need a certain number of identical pods running in parallel. It is very similar to a Deployment, and its canonical use cases are stateless services such as web servers, whose instances do not need to be aware of each other.

For this reason, it is not a good fit for QuasarDB: we definitely need the nodes to be able to discover each other.

StatefulSet

A StatefulSet is similar to a Deployment, but has an important feature: it provides guarantees about the ordering and uniqueness of pods. Each pod has a unique identity which persists across any rescheduling.

This is a great fit for QuasarDB for a number of reasons:

- Thanks to the persistent identity, there is no risk of a pod being relaunched under a different name or identity, which would cause unnecessary data migration as well as cluster topology changes.

- The pod identities are deterministic, allowing us to predict the names of the other pods in our StatefulSet. This lets us implement a mechanism for auto-discovery of the pods in a cluster.
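To make the determinism concrete: the StatefulSet defined later in this post is named quasardb and governed by the headless service qdb-hs, so its pods are always named quasardb-0, quasardb-1, and so on, each with a stable DNS record of the form <pod>.<service>.<namespace>.svc.<cluster domain>. The sketch below prints those names for a given cluster size. The helper name statefulset_pod_fqdns is hypothetical, and the default namespace and cluster.local domain are assumptions (both are Kubernetes defaults).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the stable DNS names Kubernetes gives the pods
# of a StatefulSet, from its name, governing headless service, namespace
# and replica count. Assumes the default cluster domain "cluster.local".
statefulset_pod_fqdns() {
  local sts_name=$1 service=$2 namespace=$3 replicas=$4 i
  for ((i = 0; i < replicas; i++)); do
    # Pod names are <statefulset>-<ordinal>, with ordinals starting at 0.
    echo "${sts_name}-${i}.${service}.${namespace}.svc.cluster.local"
  done
}

statefulset_pod_fqdns quasardb qdb-hs default 3
# quasardb-0.qdb-hs.default.svc.cluster.local
# quasardb-1.qdb-hs.default.svc.cluster.local
# quasardb-2.qdb-hs.default.svc.cluster.local
```

Because the names depend only on these inputs, every pod can compute the full list without querying the Kubernetes API.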

Service definition

Because of the way QuasarDB and StatefulSets interact, we are going to define two different types of services:

- A headless service, which drives the identity and naming strategy of our StatefulSet pods and gives us deterministic pod names.

- A regular service, which clients use to connect to the cluster. With QuasarDB's cluster discovery mechanism, the following happens when a client connects to QuasarDB:

  - The client connects to the public service's IP, for example qdb://qdb-cs:2836, and requests the QuasarDB node topology to discover all the nodes in the cluster.

  - Kubernetes forwards this request to one of the pods in the cluster.

  - The pods identify themselves using the headless service, so the pod returns an array such as ["qdb://qdb-hs-1:2836", "qdb://qdb-hs-2:2836", "qdb://qdb-hs-3:2836"].

  - Subsequent requests from the client go directly to the headless service hostnames.

We define both services as follows:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: qdb-hs
  labels:
    app: qdb
spec:
  ports:
    - port: 2836
      name: client
    - port: 2837
      name: control
  clusterIP: None
  selector:
    app: qdb
---
apiVersion: v1
kind: Service
metadata:
  name: qdb-cs
  labels:
    app: qdb
spec:
  ports:
    - port: 2836
      name: client
    - port: 2837
      name: control
  selector:
    app: qdb
```

We now have two distinct services, both mapped to any pods carrying the app: qdb label.

StatefulSet definition

We start out by declaring our StatefulSet, again around the app: qdb selector:

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: quasardb
spec:
  selector:
    matchLabels:
      app: qdb
  serviceName: qdb-hs
  replicas: 3
  podManagementPolicy: OrderedReady
  template:
    metadata:
      labels:
        app: qdb
    spec:
```

There are a few things worth noting here:

- We explicitly map the StatefulSet to the qdb-hs headless service we defined earlier, which means the pods take on an identity based on this service's DNS names.

- By setting podManagementPolicy: OrderedReady, we instruct Kubernetes to launch the pods one by one, in order from 0 to N-1, and to terminate them in reverse order. It is possible to launch all pods at once with the Parallel policy, but this makes the QuasarDB cluster take much longer to stabilize.
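As a toy illustration of what OrderedReady means (this is not how the StatefulSet controller is implemented), the sketch below creates pods in ordinal order, waits for a readiness check to pass before creating the next one, and scales down from the highest ordinal. The function names are hypothetical; the readiness check is passed in as a command, and `true` stands in for a pod that becomes ready immediately.

```shell
#!/usr/bin/env bash
# Toy simulation of podManagementPolicy: OrderedReady (not Kubernetes code).
# In a real cluster, the readinessProbe plays the role of ready_cmd.
ordered_scale_up() {
  local replicas=$1 ready_cmd=$2 i
  for ((i = 0; i < replicas; i++)); do
    echo "create pod ${i}"
    # Block until pod i reports ready before creating pod i+1.
    until "$ready_cmd" "$i"; do
      sleep 1
    done
  done
}

ordered_scale_down() {
  local replicas=$1 i
  # Pods are removed from the highest ordinal down to 0.
  for ((i = replicas - 1; i >= 0; i--)); do
    echo "terminate pod ${i}"
  done
}

ordered_scale_up 3 true   # create pod 0, create pod 1, create pod 2
ordered_scale_down 3      # terminate pod 2, terminate pod 1, terminate pod 0
```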

We can now define the actual pod spec for the StatefulSet.

QuasarDB license key

The best way to manage your QuasarDB license key on Kubernetes is to make use of the built-in secrets management. Assuming you have received a license key, first create it inside Kubernetes as a generic secret:

```shell
kubectl create secret generic qdb-secrets --from-file=/my/path/to/quasardb.key
```

Then add this secret as a volume to our pod spec:

```yaml
volumes:
  - name: secrets
    secret:
      secretName: qdb-secrets
```

We will later mount this volume directly into the pod, exposing our license key to the QuasarDB daemon.

Container definition

Our pod features a single container, which we can now define. We base it on the public QuasarDB Docker image:

```yaml
- name: qdbd
  image: bureau14/qdb:3.7
  imagePullPolicy: "Always"
  ports:
    - containerPort: 2836
      name: client
    - containerPort: 2837
      name: control
  resources:
    limits:
      memory: "2Gi"
```

Please note that QuasarDB needs two ports to be available: 2836, which carries most of the traffic between client and server, and 2837, a low-volume port for cluster control messages.

Per Kubernetes best practices, we recommend setting appropriate resource limits. QuasarDB will automatically detect (and use) the available memory.
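The limit uses Kubernetes quantity notation, in which `Gi` is a binary (power-of-1024) suffix: `2Gi` is 2 × 1024³ = 2147483648 bytes, whereas `2G` would be the decimal 2 × 10⁹. The hypothetical helper below converts the most common integer quantities to bytes, which can help when comparing the limit with what a process inside the container reports. It is only a sketch: fractional values and the rarer suffixes are rejected.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert an integer Kubernetes memory quantity such as
# "2Gi", "512Mi" or "1G" to bytes. Fractions and rarer suffixes (P, Pi, E,
# Ei, m, exponent notation) are deliberately not handled.
quantity_to_bytes() {
  local re='^([0-9]+)(Ki|Mi|Gi|Ti|k|M|G|T)?$'
  if [[ ! $1 =~ $re ]]; then
    echo "unsupported quantity: $1" >&2
    return 1
  fi
  # Force base 10 so a value like "08" is not read as octal.
  local n=$((10#${BASH_REMATCH[1]})) unit=${BASH_REMATCH[2]}
  case $unit in
    Ki) echo $((n * 1024)) ;;
    Mi) echo $((n * 1024 ** 2)) ;;
    Gi) echo $((n * 1024 ** 3)) ;;
    Ti) echo $((n * 1024 ** 4)) ;;
    k)  echo $((n * 1000)) ;;
    M)  echo $((n * 1000 ** 2)) ;;
    G)  echo $((n * 1000 ** 3)) ;;
    T)  echo $((n * 1000 ** 4)) ;;
    *)  echo "$n" ;;  # no suffix: the value is already in bytes
  esac
}

quantity_to_bytes 2Gi   # prints 2147483648
```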

Volume mounts

We need to mount two volumes for different purposes:

- One volume to expose the secret defined earlier, which holds the QuasarDB license key;

- One volume to keep persistent data storage outside of the pod.

As such, we will define these mounts:

```yaml
volumeMounts:
  - name: datadir
    mountPath: /opt/qdb/db
  - name: secrets
    mountPath: "/var/secret/"
    readOnly: true
```

The mount path for the QuasarDB daemon's data directory, /opt/qdb/db, is hardcoded in our QuasarDB Docker image. If you use our official image, you must use this path for persistent storage.

We also expose our secrets in a dedicated directory, /var/secret, and will next tell QuasarDB to look for the license file there through an environment variable.

Environment variables

Earlier we defined a secret qdb-secrets containing our license key file quasardb.key. We have mounted this volume inside our pod at /var/secret/, and can now tell our Docker container to pick up the license key using the special QDB_LICENSE_FILE environment variable:

```yaml
env:
  - name: QDB_LICENSE_FILE
    value: "/var/secret/quasardb.key"
```

If you're using our official Docker image, this will work out of the box.
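The file appears as /var/secret/quasardb.key because `kubectl create secret generic ... --from-file=<path>` names the secret key after the file's basename, and each key of a mounted secret becomes a file in the mount directory. If either the secret or the mount is misconfigured, a check such as the one below could fail fast before the daemon starts. It is a hypothetical sketch, not part of the official image: it only verifies that QDB_LICENSE_FILE is set and points to a readable, non-empty file.

```shell
#!/usr/bin/env bash
# Hypothetical preflight check (not part of the official QuasarDB image):
# fail early if the license file referenced by QDB_LICENSE_FILE is unusable.
check_license_file() {
  local path=${QDB_LICENSE_FILE:-}
  if [[ -z $path ]]; then
    echo "QDB_LICENSE_FILE is not set" >&2
    return 1
  fi
  if [[ ! -r $path || ! -s $path ]]; then
    echo "license file ${path} is missing, unreadable or empty" >&2
    return 1
  fi
  echo "using license file ${path}"
}

# Example inside the pod:
#   QDB_LICENSE_FILE=/var/secret/quasardb.key check_license_file
```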

Additionally, for our Kubernetes StatefulSet, we must add the following environment variable to our container:

```yaml
- name: K8S_REPLICA_COUNT
  value: "3"
```

This value must exactly match the replicas: 3 value of the StatefulSet definition. The variable triggers an auto-discovery mechanism in the Docker container, which is explained below.
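Nothing in Kubernetes ties K8S_REPLICA_COUNT to replicas, so the two can easily drift apart when the cluster is resized. A rough pre-deployment check could compare them in quasardb.yaml before running kubectl apply. The sketch below is hypothetical and uses plain `awk` text matching rather than real YAML parsing, so it assumes a single StatefulSet laid out as in this post (the `value:` line directly follows `name: K8S_REPLICA_COUNT`).

```shell
#!/usr/bin/env bash
# Hypothetical sanity check: compare the StatefulSet "replicas:" value with
# the K8S_REPLICA_COUNT env value in a manifest. Text matching only.
check_replica_count() {
  local manifest=$1 replicas env_count
  replicas=$(awk '$1 == "replicas:" { print $2; exit }' "$manifest")
  env_count=$(awk '
    found && $1 == "value:" { gsub(/"/, "", $2); print $2; exit }
    $0 ~ /name: *K8S_REPLICA_COUNT/ { found = 1 }
  ' "$manifest")
  if [[ -z $replicas || $replicas != "$env_count" ]]; then
    echo "mismatch: replicas=${replicas:-?} K8S_REPLICA_COUNT=${env_count:-?}" >&2
    return 1
  fi
  echo "ok: ${replicas} replicas"
}

# Usage: check_replica_count quasardb.yaml && kubectl apply -f quasardb.yaml
```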

Probes

If a QuasarDB node is told to bootstrap with another peer, it does not start listening on port 2836 until it has successfully connected to the cluster. We can combine this behavior with Kubernetes readiness and liveness probes, so that Kubernetes knows when a node is fully ready.

This works well in conjunction with the "OrderedReady" pod management policy we defined: when provisioning a cluster, Kubernetes will wait for the previous node to become fully ready (i.e. successfully joined the cluster) before allocating new ones.

```yaml
readinessProbe:
  tcpSocket:
    port: 2836
  initialDelaySeconds: 5
  periodSeconds: 10
livenessProbe:
  tcpSocket:
    port: 2836
  initialDelaySeconds: 15
  periodSeconds: 20
```
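A tcpSocket probe succeeds as soon as a TCP connection to the port can be opened; it does not speak the QuasarDB protocol. To check the same condition by hand, for instance from a debugging shell inside the cluster, a rough equivalent can use bash's built-in /dev/tcp redirection. `tcp_port_open` is a hypothetical helper, and it assumes bash was built with /dev/tcp support and that the `timeout` utility from coreutils is available.

```shell
#!/usr/bin/env bash
# Rough manual equivalent of a Kubernetes tcpSocket probe: succeed if a TCP
# connection to host:port can be opened within the given number of seconds.
tcp_port_open() {
  local host=$1 port=$2 secs=${3:-1}
  # The child bash opens fd 3 on /dev/tcp/<host>/<port>; a refused or
  # timed-out connection makes the whole command fail.
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Example (from a pod in the same namespace):
#   tcp_port_open quasardb-0.qdb-hs 2836 && echo "node 0 accepts connections"
```

As with the probe, a node that is still joining the cluster will simply refuse the connection.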

Finishing touches

Our official Docker container runs with reduced permissions by default, as the qdb user with user id 999. We can make our deployment more secure by telling Kubernetes to enforce this user:

```yaml
securityContext:
  runAsUser: 999
```

Additionally, as you saw above, we have yet to provision a volume claim template for our datadir volume. In this example we use a plain node-local filesystem. You will need to provision persistent volumes and adjust your volume claim template depending on your environment. The important part is that we require access to exclusive read/write volumes (i.e. ReadWriteOnce access mode):

```yaml
volumeClaimTemplates:
  - metadata:
      name: datadir
    spec:
      accessModes: [ "ReadWriteOnce" ]
      volumeMode: Filesystem
      resources:
        requests:
          storage: 10Gi
      storageClassName: manual
```
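Kubernetes creates one PersistentVolumeClaim per pod from this template and names it <template name>-<pod name>. Because the name is stable, a rescheduled quasardb-1 reattaches to the same claim, and by default the claims are kept when the StatefulSet is scaled down or deleted. With the manual storage class you must provide matching persistent volumes yourself, so it helps to know the claim names in advance. The helper below is hypothetical and simply derives them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the PersistentVolumeClaim names a StatefulSet
# creates from a volumeClaimTemplate: <template>-<statefulset>-<ordinal>.
expected_pvc_names() {
  local template=$1 sts_name=$2 replicas=$3 i
  for ((i = 0; i < replicas; i++)); do
    echo "${template}-${sts_name}-${i}"
  done
}

expected_pvc_names datadir quasardb 3
# datadir-quasardb-0
# datadir-quasardb-1
# datadir-quasardb-2
```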

Launching the cluster

Assuming you saved the template in quasardb.yaml, we can simply launch our 3-node cluster as follows:

```shell
$ kubectl apply -f quasardb.yaml
```

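You should now see your cluster launch. To verify it, launch an ad-hoc pod running the QuasarDB shell:

```shell
$ kubectl run --generator=run-pod/v1 -ti qdbsh --image=bureau14/qdbsh:3.7 -- --cluster qdb://qdb-cs:2836/
```

Recent kubectl releases no longer accept the --generator flag, since kubectl run now always creates a plain pod; if yours rejects it, simply drop the flag.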

If everything worked correctly, you will now see a qdbsh prompt. Note how the client connects to a single entry point, our client service qdb-cs, and from there communicates directly with the headless service endpoints.

A note about node discovery

We conveniently left this topic out of the discussion above, but it's worth mentioning: how do nodes discover each other in this configuration, and what is the topology?

As mentioned earlier, we rely on the deterministic pod identities of a StatefulSet: in a 3-node cluster, each node can predict the identity of the other 2 nodes. To make this work, we added some special "glue" to our official Docker container:

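```shell
# Only run the auto-discovery logic when running inside a StatefulSet.
if [[ -n ${K8S_REPLICA_COUNT} ]]
then
    HOST=$(hostname -s)      # e.g. quasardb-1
    DOMAIN=$(hostname -d)    # e.g. qdb-hs.default.svc.cluster.local

    # Split the hostname into the StatefulSet name and the pod ordinal.
    if [[ $HOST =~ (.*)-([0-9]+)$ ]]
    then
        NAME=${BASH_REMATCH[1]}
        ORD=${BASH_REMATCH[2]}

        # Ordinals start at 0, so ordinal 1 of 3 becomes node id "2/3".
        NODE_OFFSET=$((ORD + 1))
        NODE_ID="${NODE_OFFSET}/${K8S_REPLICA_COUNT}"
        echo "Setting node id to ${NODE_ID}"
        # patch_conf is a helper from the image (not shown in this post).
        patch_conf ".local.chord.node_id" "\"${NODE_ID}\""

        # Bootstrap against the pods with a lower ordinal (see below).
        BOOTSTRAP_PEERS=$(bootstrap_peers "${DOMAIN}" "${NAME}" "${ORD}")
        echo "Setting bootstrap peers to ${BOOTSTRAP_PEERS}"
        patch_conf ".local.chord.bootstrapping_peers" "${BOOTSTRAP_PEERS}"
    fi
fi
```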

What happens here is the following:

- If our Docker container detects that the K8S_REPLICA_COUNT environment variable is set, it assumes it is running inside a Kubernetes StatefulSet.

- It looks up the short hostname and the domain name making up the current pod's fully qualified DNS name, e.g. quasardb-1.qdb-hs.default.svc.cluster.local.

- Based on the host part, quasardb-1, the pod determines its own position inside the StatefulSet: as numbering starts at 0, quasardb-1 is position 2 in our cluster.

- Based on this position and the total cluster size, it assigns this node the id 2/3.

- Based on this position, it can also determine the hostnames of the other pods in our StatefulSet, i.e. quasardb-0 and quasardb-2.

- It then automatically patches the configuration:

  - It sets its own node id to 2/3;

  - It sets its bootstrapping peers to the pods that precede it, here quasardb-0.
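The node id derivation can be tried without a cluster by passing the short hostname in explicitly instead of calling `hostname -s`. The sketch below reproduces only that part of the startup script; `node_id_for_host` is a hypothetical name, and the real script additionally writes the result into the configuration with patch_conf.

```shell
#!/usr/bin/env bash
# Reproduces the node id derivation of the startup script above, with the
# short hostname passed as an argument instead of read from `hostname -s`.
node_id_for_host() {
  local host=$1 replica_count=$2
  local re='^(.*)-([0-9]+)$'
  if [[ $host =~ $re ]]; then
    local ord=${BASH_REMATCH[2]}
    # The script maps ordinal 0 to node id 1/N, ordinal 1 to 2/N, and so on.
    echo "$((10#$ord + 1))/${replica_count}"
  else
    echo "hostname ${host} does not end in an ordinal" >&2
    return 1
  fi
}

node_id_for_host quasardb-1 3   # prints 2/3
```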

We calculate our bootstrap peers by using the following helper function:

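```shell
# Build a JSON-style list of "<address>:2836" entries for every pod with a
# lower ordinal than this one, highest ordinal first.
# host_to_ip is a helper from the image (not shown in this post).
function bootstrap_peers {
    local DOMAIN=$1
    local HOSTNAME=$2
    local THIS_REPLICA=$3
    local RET="[" i

    for ((i = THIS_REPLICA - 1; i >= 0; i--))
    do
        # Separate entries with a comma, except before the first one.
        if [[ "${RET}" != "[" ]]
        then
            RET="${RET}, "
        fi
        local THIS_HOST="${HOSTNAME}-${i}.${DOMAIN}"
        local THIS_IP=$(host_to_ip "${THIS_HOST}")
        RET="${RET}\"${THIS_IP}:2836\""
    done

    RET="${RET}]"
    # Quote the output so the brackets are never expanded as a glob pattern.
    echo "${RET}"
}
```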

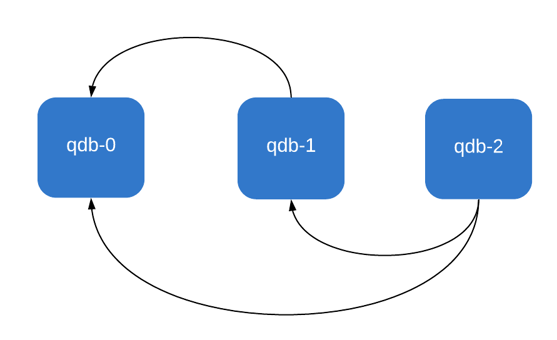

As you can see, our bootstrapping function essentially tries to look for all nodes "prior" to its own position in the cluster. To illustrate, this is how our three nodes would bootstrap:

By leveraging Kubernetes StatefulSets and adding a little orchestration glue to a Docker container, we thus implement automatic node discovery.
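The bootstrap order can be reproduced without a cluster by running a copy of `bootstrap_peers` with a stand-in for `host_to_ip`, whose real implementation is not shown in this post. The stub below is an assumption for illustration: it echoes the hostname back instead of resolving it, so the output shows hostnames where the real function would use resolved addresses.

```shell
#!/usr/bin/env bash
# Stand-in for the image's host_to_ip helper (not shown in this post):
# return the hostname unchanged instead of resolving it.
host_to_ip() {
  echo "$1"
}

# Copy of bootstrap_peers: every pod with a lower ordinal, highest first,
# formatted as a JSON-style array of "host:2836" entries.
bootstrap_peers() {
  local domain=$1 name=$2 this_replica=$3
  local ret="[" i
  for ((i = this_replica - 1; i >= 0; i--)); do
    if [[ $ret != "[" ]]; then
      ret="${ret}, "
    fi
    ret="${ret}\"$(host_to_ip "${name}-${i}.${domain}"):2836\""
  done
  echo "${ret}]"
}

for ord in 0 1 2; do
  echo "quasardb-${ord}: $(bootstrap_peers qdb-hs.default.svc.cluster.local quasardb "$ord")"
done
# quasardb-0: []
# quasardb-1: ["quasardb-0.qdb-hs.default.svc.cluster.local:2836"]
# quasardb-2: ["quasardb-1.qdb-hs.default.svc.cluster.local:2836", "quasardb-0.qdb-hs.default.svc.cluster.local:2836"]
```

The first pod starts with no peers, and every later pod only lists pods that OrderedReady has already brought up and marked ready, which is why the two mechanisms fit together.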

Conclusion

We hope this article helps you get started with QuasarDB on Kubernetes. If it piqued your interest and you would like to discuss your configuration, feel free to contact one of our solution architects, who will be more than happy to discuss your needs in more detail.

Full template

For completeness, here is the entire template we implemented in this article:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: qdb-hs
  labels:
    app: qdb
spec:
  ports:
    - port: 2836
      name: client
    - port: 2837
      name: control
  clusterIP: None
  selector:
    app: qdb
---
apiVersion: v1
kind: Service
metadata:
  name: qdb-cs
  labels:
    app: qdb
spec:
  ports:
    - port: 2836
      name: client
    - port: 2837
      name: control
  selector:
    app: qdb
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: quasardb
spec:
  selector:
    matchLabels:
      app: qdb
  serviceName: qdb-hs
  replicas: 3
  podManagementPolicy: OrderedReady
  template:
    metadata:
      labels:
        app: qdb
    spec:
      volumes:
        - name: secrets
          secret:
            secretName: qdb-secrets
      containers:
        - name: qdbd
          image: bureau14/qdb:3.7
          imagePullPolicy: "Always"
          ports:
            - containerPort: 2836
              name: client
            - containerPort: 2837
              name: control
          resources:
            limits:
              memory: "2Gi"
          volumeMounts:
            - name: datadir
              mountPath: /opt/qdb/db
            - name: secrets
              mountPath: "/var/secret/"
              readOnly: true
          env:
            - name: QDB_LICENSE_FILE
              value: "/var/secret/quasardb.key"
            - name: K8S_REPLICA_COUNT
              value: "3"
          readinessProbe:
            tcpSocket:
              port: 2836
            initialDelaySeconds: 5
            periodSeconds: 10
          livenessProbe:
            tcpSocket:
              port: 2836
            initialDelaySeconds: 15
            periodSeconds: 20
          securityContext:
            runAsUser: 999
  volumeClaimTemplates:
    - metadata:
        name: datadir
      spec:
        accessModes: [ "ReadWriteOnce" ]
        volumeMode: Filesystem
        resources:
          requests:
            storage: 10Gi
        storageClassName: manual
```